Parsing - Introduction to Parsers

Last Updated :

28 Jan, 2025

Parsing, also known as syntactic analysis, is the process of analyzing a sequence of tokens to determine the grammatical structure of a program. It takes the stream of tokens, which are generated by a lexical analyzer or tokenizer, and organizes them into a parse tree or syntax tree.

The parse tree visually represents how the tokens fit together according to the rules of the language's syntax. This tree structure is crucial for understanding the program's structure and helps in the next stages of processing, such as code generation or execution. Additionally, parsing ensures that the sequence of tokens follows the syntactic rules of the programming language, making the program valid and ready for further analysis or execution.

What is the Role of Parser?

A parser performs syntactic and semantic analysis of source code, converting it into an intermediate representation while detecting and handling errors.

- Context-free syntax analysis: The parser checks if the structure of the code follows the basic rules of the programming language (like grammar rules). It looks at how words and symbols are arranged.

- Guides context-sensitive analysis: It helps with deeper checks that depend on the meaning of the code, like making sure variables are used correctly. For example, it ensures that a variable used in a mathematical operation, like

x + 2, is a number and not text. - Constructs an intermediate representation: The parser creates a simpler version of your code that’s easier for the computer to understand and work with.

- Produces meaningful error messages: If there’s something wrong in your code, the parser tries to explain the problem clearly so you can fix it.

- Attempts error correction: Sometimes, the parser tries to fix small mistakes in your code so it can keep working without breaking completely.

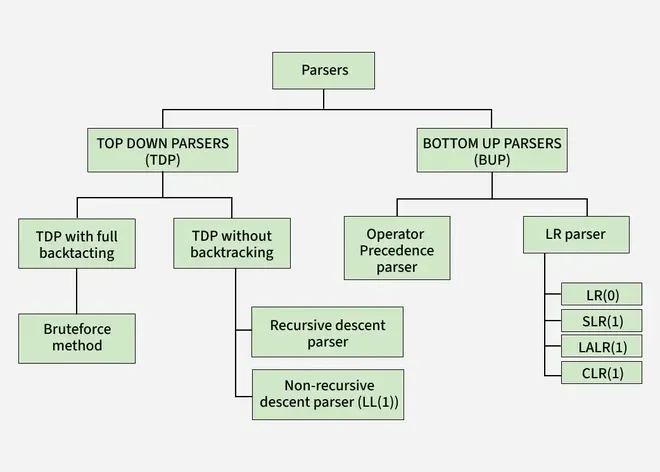

Types of Parsing

The parsing is divided into two types, which are as follows:

- Top-down Parsing

- Bottom-up Parsing

Top-Down Parsing

Top-down parsing is a method of building a parse tree from the start symbol (root) down to the leaves (end symbols). The parser begins with the highest-level rule and works its way down, trying to match the input string step by step.

- Process: The parser starts with the start symbol and looks for rules that can help it rewrite this symbol. It keeps breaking down the symbols (non-terminals) into smaller parts until it matches the input string.

- Leftmost Derivation: In top-down parsing, the parser always chooses the leftmost non-terminal to expand first, following what is called leftmost derivation. This means the parser works on the left side of the string before moving to the right.

- Other Names: Top-down parsing is sometimes called recursive parsing or predictive parsing. It is called recursive because it often uses recursive functions to process the symbols.

Top-down parsing is useful for simple languages and is often easier to implement. However, it can have trouble with more complex or ambiguous grammars.

Top-down parsers can be classified into two types based on whether they use backtracking or not:

1. Top-down Parsing with Backtracking

In this approach, the parser tries different possibilities when it encounters a choice, If one possibility doesn’t work (i.e., it doesn’t match the input string), the parser backtracks to the previous decision point and tries another possibility.

Example: If the parser chooses a rule to expand a non-terminal, and it doesn't work, it will go back, undo the choice, and try a different rule.

Advantage: It can handle grammars where there are multiple possible ways to expand a non-terminal.

Disadvantage: Backtracking can be slow and inefficient because the parser might have to try many possibilities before finding the correct one.

2. Top-down Parsing without Backtracking

In this approach, the parser does not backtrack. It tries to find a match with the input using only the first choice it makes, If it doesn’t match the input, it fails immediately instead of going back to try another option.

Example: The parser will always stick with its first decision and will not reconsider other rules once it starts parsing.

Advantage: It is faster because it doesn’t waste time going back to previous steps.

Disadvantage: It can only handle simpler grammars that don’t require trying multiple choices.

Read more about classification of top-down parser.

Bottom-Up Parsing

Bottom-up parsing is a method of building a parse tree starting from the leaf nodes (the input symbols) and working towards the root node (the start symbol). The goal is to reduce the input string step by step until we reach the start symbol, which represents the entire language.

- Process: The parser begins with the input symbols and looks for patterns that can be reduced to non-terminals based on the grammar rules. It keeps reducing parts of the string until it forms the start symbol.

- Rightmost Derivation in Reverse: In bottom-up parsing, the parser traces the rightmost derivation of the string but works backwards, starting from the input string and moving towards the start symbol.

- Shift-Reduce Parsing: Bottom-up parsers are often called shift-reduce parsers because they shift (move symbols) and reduce (apply rules to replace symbols) to build the parse tree.

Bottom-up parsing is efficient for handling more complex grammars and is commonly used in compilers. However, it can be more challenging to implement compared to top-down parsing.

Generally, bottom-up parsing is categorized into the following types:

1. LR parsing/Shift Reduce Parsing: Shift reduce Parsing is a process of parsing a string to obtain the start symbol of the grammar.

2. Operator Precedence Parsing: The grammar defined using operator grammar is known as operator precedence parsing. In operator precedence parsing there should be no null production and two non-terminals should not be adjacent to each other.

Difference Between Bottom-Up and Top-Down Parser

| Feature | Top-down Parsing | Bottom-up Parsing |

|---|

| Direction | Builds tree from root to leaves. | Builds tree from leaves to root. |

| Derivation | Uses leftmost derivation. | Uses rightmost derivation in reverse. |

| Efficiency | Can be slower, especially with backtracking. | More efficient for complex grammars. |

| Example Parsers | Recursive descent, LL parser. | Shift-reduce, LR parser. |

Read more about Difference Between Bottom-Up and Top-Down Parser.

Similar Reads

Introduction of Compiler Design A compiler is software that translates or converts a program written in a high-level language (Source Language) into a low-level language (Machine Language or Assembly Language). Compiler design is the process of developing a compiler.The development of compilers is closely tied to the evolution of

9 min read

Compiler Design Basics

Introduction of Compiler DesignA compiler is software that translates or converts a program written in a high-level language (Source Language) into a low-level language (Machine Language or Assembly Language). Compiler design is the process of developing a compiler.The development of compilers is closely tied to the evolution of

9 min read

Compiler construction toolsThe compiler writer can use some specialized tools that help in implementing various phases of a compiler. These tools assist in the creation of an entire compiler or its parts. Some commonly used compiler construction tools include: Parser Generator - It produces syntax analyzers (parsers) from the

4 min read

Phases of a CompilerA compiler is a software tool that converts high-level programming code into machine code that a computer can understand and execute. It acts as a bridge between human-readable code and machine-level instructions, enabling efficient program execution. The process of compilation is divided into six p

10 min read

Symbol Table in CompilerEvery compiler uses a symbol table to track all variables, functions, and identifiers in a program. It stores information such as the name, type, scope, and memory location of each identifier. Built during the early stages of compilation, the symbol table supports error checking, scope management, a

8 min read

Error Handling in Compiler DesignDuring the process of language translation, the compiler can encounter errors. While the compiler might not always know the exact cause of the error, it can detect and analyze the visible problems. The main purpose of error handling is to assist the programmer by pointing out issues in their code. E

5 min read

Language Processors: Assembler, Compiler and InterpreterComputer programs are generally written in high-level languages (like C++, Python, and Java). A language processor, or language translator, is a computer program that convert source code from one programming language to another language or to machine code (also known as object code). They also find

5 min read

Generation of Programming LanguagesProgramming languages have evolved significantly over time, moving from fundamental machine-specific code to complex languages that are simpler to write and understand. Each new generation of programming languages has improved, allowing developers to create more efficient, human-readable, and adapta

6 min read

Lexical Analysis

Introduction of Lexical AnalysisLexical analysis, also known as scanning is the first phase of a compiler which involves reading the source program character by character from left to right and organizing them into tokens. Tokens are meaningful sequences of characters. There are usually only a small number of tokens for a programm

6 min read

Flex (Fast Lexical Analyzer Generator)Flex (Fast Lexical Analyzer Generator), or simply Flex, is a tool for generating lexical analyzers scanners or lexers. Written by Vern Paxson in C, circa 1987, Flex is designed to produce lexical analyzers that is faster than the original Lex program. Today it is often used along with Berkeley Yacc

7 min read

Introduction of Finite AutomataFinite automata are abstract machines used to recognize patterns in input sequences, forming the basis for understanding regular languages in computer science. They consist of states, transitions, and input symbols, processing each symbol step-by-step. If the machine ends in an accepting state after

4 min read

Classification of Context Free GrammarsA Context-Free Grammar (CFG) is a formal rule system used to describe the syntax of programming languages in compiler design. It provides a set of production rules that specify how symbols (terminals and non-terminals) can be combined to form valid sentences in the language. CFGs are important in th

4 min read

Ambiguous GrammarContext-Free Grammars (CFGs) is a way to describe the structure of a language, such as the rules for building sentences in a language or programming code. These rules help define how different symbols can be combined to create valid strings (sequences of symbols).CFGs can be divided into two types b

7 min read

Syntax Analysis & Parsers

Syntax Directed Translation & Intermediate Code Generation

Syntax Directed Translation in Compiler DesignSyntax-Directed Translation (SDT) is a method used in compiler design to convert source code into another form while analyzing its structure. It integrates syntax analysis (parsing) with semantic rules to produce intermediate code, machine code, or optimized instructions.In SDT, each grammar rule is

8 min read

S - Attributed and L - Attributed SDTs in Syntax Directed TranslationIn Syntax-Directed Translation (SDT), the rules are those that are used to describe how the semantic information flows from one node to the other during the parsing phase. SDTs are derived from context-free grammars where referring semantic actions are connected to grammar productions. Such action c

4 min read

Parse Tree and Syntax TreeParse Tree and Syntax tree are tree structures that represent the structure of a given input according to a formal grammar. They play an important role in understanding and verifying whether an input string aligns with the language defined by a grammar. These terms are often used interchangeably but

4 min read

Intermediate Code Generation in Compiler DesignIn the analysis-synthesis model of a compiler, the front end of a compiler translates a source program into an independent intermediate code, then the back end of the compiler uses this intermediate code to generate the target code (which can be understood by the machine). The benefits of using mach

6 min read

Issues in the design of a code generatorA code generator is a crucial part of a compiler that converts the intermediate representation of source code into machine-readable instructions. Its main task is to produce the correct and efficient code that can be executed by a computer. The design of the code generator should ensure that it is e

7 min read

Three address code in CompilerTAC is an intermediate representation of three-address code utilized by compilers to ease the process of code generation. Complex expressions are, therefore, decomposed into simple steps comprising, at most, three addresses: two operands and one result using this code. The results from TAC are alway

6 min read

Data flow analysis in CompilerData flow is analysis that determines the information regarding the definition and use of data in program. With the help of this analysis, optimization can be done. In general, its process in which values are computed using data flow analysis. The data flow property represents information that can b

6 min read

Code Optimization & Runtime Environments

Practice Questions