Machine learning developers gained new abilities to develop and run their ML programs on the framework and hardware of their choice thanks to the OpenXLA Project, which today announced the availability of key open source components.

Data scientists and ML engineers often spend a lot of time optimizing their models to work on each hardware target. Whether they’re working in a framework like TensorFlow or PyTorch and targeting GPUs or TPUs, there has been no way to avoid this manual effort, which consumes precious time and makes it difficult to move applications to other hardware at a later date.

This is the general problem targeted by the folks behind the OpenXLA Project, which was founded last fall and today includes Alibaba, Amazon Web Services, AMD, Apple, Arm, Cerebras Systems, Google, Graphcore, Hugging Face, Intel, Meta, and NVIDIA as its members.

By creating a unified machine learning compiler that works with a range of ML development frameworks and hardware platforms and runtimes, OpenXLA can accelerate the delivery of ML applications and provide greater code portability.

Today, the group announced the availability of three open source tools as part of the project. XLA is an ML compiler for CPUs, GPUs, and accelerators; StableHLO is an operation set for high-level operations (HLO) in ML that provides portability between frameworks and compilers; and IREE (Intermediate Representation Execution Environment) is an end-to-end MLIR (Multi-Level Intermediate Representation) compiler and runtime for mobile and edge deployments. All three are available for download from the OpenXLA GitHub site.

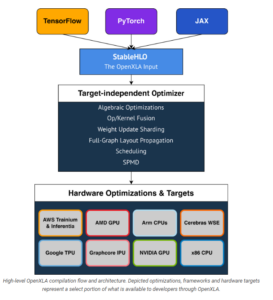

Initial frameworks supported by OpenXLA include TensorFlow, PyTorch, and JAX. JAX, a new Google framework, is designed for transforming numerical functions, and is described as bringing together a modified version of Autograd and XLA while following the structure and workflow of NumPy. Initial hardware targets and optimizations include Intel CPUs, Nvidia GPUs, Google TPUs, AMD GPUs, Arm CPUs, AWS Trainium and Inferentia, Graphcore’s IPU, and the Cerebras Wafer-Scale Engine (WSE). OpenXLA’s “target-independent optimizer” targets algebraic functions, op/kernel fusion, weight update sharding, full-graph layout propagation, scheduling, and SPMD for parallelism.
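For readers who want a feel for how a framework hands work to the compiler, here is a minimal JAX sketch. It is an illustration rather than anything from the OpenXLA announcement: the function `scaled_tanh`, the array shapes, and the values are made up for the example. `jax.jit` asks JAX to trace the function and compile it through XLA, and `lower(...).as_text()` returns the intermediate representation handed to the compiler (StableHLO in recent JAX releases, though the exact dialect depends on the installed version). Whether the matrix multiply, add, and `tanh` are actually fused into one kernel depends on the backend.

```python
import jax
import jax.numpy as jnp


def scaled_tanh(x, w, b):
    # A matmul followed by elementwise ops; a candidate for op/kernel fusion.
    return jnp.tanh(x @ w + b) * 2.0


# jax.jit traces the Python function and compiles it with XLA on first call.
fast_scaled_tanh = jax.jit(scaled_tanh)

# Hypothetical inputs chosen only for illustration.
x = jnp.ones((4, 8))
w = jnp.full((8, 3), 0.1)
b = jnp.zeros((3,))

eager = scaled_tanh(x, w, b)          # op-by-op execution
compiled = fast_scaled_tanh(x, w, b)  # XLA-compiled execution

# Text of the IR that JAX lowers to before XLA optimizes it.
ir_text = fast_scaled_tanh.lower(x, w, b).as_text()
print(ir_text[:300])
```

The same Python function runs unchanged on whichever backend JAX finds (CPU, GPU, or TPU), which is the portability argument in miniature.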

The OpenXLA compiler products can be used for a variety of ML use cases, including full-scale training of massive deep learning models, such as large language models (LLMs) and generative computer vision models like Stable Diffusion. They can also be used for inference; Waymo already uses OpenXLA for real-time inferencing on its self-driving cars, according to a post today on the Google open source blog.

The OpenXLA compiler ecosystem provides portability between ML development tools and hardware targets (Image source OpenXLA Project)

OpenXLA members touted some of their early successes with the new compiler. Alibaba, for instance, says it was able to train a GPT2 model on Nvidia GPUs 72% faster using OpenXLA, and saw an 88% speedup for a Swin Transformer training task on GPUs.

Hugging Face, meanwhile, said it saw about a 100% speedup when it paired XLA with its text generation model written in TensorFlow. “OpenXLA promises standardized building blocks upon which we can build much needed interoperability, and we can’t wait to follow and contribute!” said Morgan Funtowicz, head of machine learning optimization for the Brooklyn, New York, company.

Meta was able to “achieve significant performance improvements on important projects,” including using XLA on PyTorch models running on Cloud TPUs, said Soumith Chintala, the lead maintainer for PyTorch.

The chip startups are pleased with XLA, which reduces the risk to customers of adopting relatively new, unproven hardware. “Our IPU compiler pipeline has used XLA since it was made public,” said David Norman, Graphcore’s director of software design. “Thanks to XLA’s platform independence and stability, it provides an ideal frontend for bringing up novel silicon.”

“OpenXLA helps extend our user reach and accelerated time to solution by providing the Cerebras Wafer-Scale Engine with a common interface to higher level ML frameworks,” says Andy Hock, a vice president and head of product at Cerebras. “We are tremendously excited to see the OpenXLA ecosystem available for even broader community engagement, contribution, and use on GitHub.”

AMD and Arm, which are battling bigger chipmakers for pieces of the ML training and serving pies, are also happy members of the OpenXLA Project.

“We value projects with open governance, flexible and broad applicability, cutting edge features and top-notch performance and are looking forward to the continued collaboration to expand open source ecosystem for ML developers,” Alan Lee, AMD’s corporate vice president of software development, said in the blog.

“The OpenXLA Project marks an important milestone on the path to simplifying ML software development,” said Peter Greenhalgh, vice president of technology and fellow at Arm. “We are fully supportive of the OpenXLA mission and look forward to leveraging the OpenXLA stability and standardization across the Arm Neoverse hardware and software roadmaps.”

Curiously absent are IBM, which continues to innovate on chips with its Power10 processor, and Microsoft, the world’s second largest cloud provider behind AWS.

